By non-invasively attaching electrodes to the scalp, it is possible to pick up the brain’s naturally-occurring electrical activity. Not all brain activity can be measured this way, but we can obtain signals from large populations of neurons that have the right spatial configuration and that are active at about the same time. Luckily, this is true for many neurons in the cerebral cortex, which is critical for perception, attention, memory, language, and higher cognition.

The on-going electrical activity of the brain is known as the electroencephalogram or EEG. Recordings of the EEG play an important role in clinical contexts, as they reveal information about brain health and states of alertness. For psychological research, we are interested in that part of the EEG signal that is specifically related to an event of interest — for example, the appearance of a sensory stimulus or a participant’s response. These are small signals that are hard to see in the on-going EEG. Thus, we use procedures to extract these signals to create Event-Related Potentials (ERPs), i.e., brain activity that is time-locked (lined up in time) to events of interest.
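The extraction procedure described above is, at its core, time-locked averaging: cut a window of EEG around each event, subtract a pre-event baseline, and average the windows point by point so that activity unrelated to the event tends to cancel out. The sketch below illustrates the idea on simulated data. It is not our lab's actual processing pipeline; the function names, the noise level, the size of the simulated response, and the window lengths are all assumptions chosen for illustration.

```python
import math
import random


def extract_epochs(signal, event_samples, pre, post):
    """Cut a window of `pre` samples before and `post` samples after each event."""
    epochs = []
    for onset in event_samples:
        start, stop = onset - pre, onset + post
        if start < 0 or stop > len(signal):
            continue  # skip events too close to the edges of the recording
        epochs.append(signal[start:stop])
    return epochs


def baseline_correct(epoch, pre):
    """Subtract the mean of the pre-event interval from every sample."""
    baseline = sum(epoch[:pre]) / pre
    return [value - baseline for value in epoch]


def average_erp(signal, event_samples, pre, post):
    """Average baseline-corrected epochs point by point to estimate the ERP."""
    epochs = [baseline_correct(e, pre)
              for e in extract_epochs(signal, event_samples, pre, post)]
    if not epochs:
        raise ValueError("no complete epochs available")
    n = len(epochs)
    return [sum(e[i] for e in epochs) / n for i in range(pre + post)]


def simulate_eeg(n_samples, event_samples, seed=0):
    """Hypothetical data: large background noise plus a small response per event."""
    rng = random.Random(seed)
    # Background EEG, modeled here as Gaussian noise (assumed SD: 10 microvolts).
    signal = [rng.gauss(0.0, 10.0) for _ in range(n_samples)]
    for onset in event_samples:
        # Assumed evoked response: a negative bump lasting 100 samples,
        # reaching -5 microvolts at 50 samples after the event.
        for k in range(100):
            if onset + k < n_samples:
                signal[onset + k] += -5.0 * math.sin(math.pi * k / 100)
    return signal


# 400 simulated events, spaced 300 samples apart.
events = [200 + 300 * i for i in range(400)]
eeg = simulate_eeg(200 + 300 * 400 + 200, events)
erp = average_erp(eeg, events, pre=50, post=150)

# Mean of the averaged waveform around the simulated peak latency.
peak_region = erp[50 + 40:50 + 60]
print(round(sum(peak_region) / len(peak_region), 1))
```

In a single simulated trial the response is buried in noise twice its size; after averaging over many trials, the remaining noise shrinks roughly with the square root of the number of trials, and the time-locked response becomes visible.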

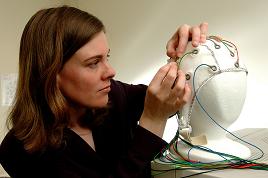

In our lab, ERPs are measured by placing a stretchy cap with a set of embedded electrodes on a participant’s head. Additional electrodes are attached with tape to the face and neck. The electrodes make contact with the skin through a conductive gel. Fitting the cap takes between 30 and 60 minutes: the electrodes are lined up, the area under each electrode is cleaned (a little “exfoliation” treatment), and the gel is applied (“moisturization”). During this “spa treatment” participants may study, read, listen to music, and/or chat with the experimenters. Most people find the procedure a little strange but not unpleasant.

Once the electrodes have been applied, participants move from the preparation area to an experimental booth, where they are seated in front of a computer. The electrodes are connected to amplifiers (which make the small brain signals larger), and the participants then spend around an hour doing a task at the computer. Participants are also given the opportunity to see their brain activity as it unfolds. At the end of the experimental task, the electrodes are removed and participants have the opportunity to clean up before leaving the lab. The experimenters process the data over the next few days or weeks.

Language comprehension, and especially its implementation in the brain, is challenging to study. There isn’t a typical behavior that arises when people comprehend something, and animals do not exhibit the full range of human language abilities. Language also unfolds over multiple time scales and includes important mechanisms that arise extremely rapidly — on the order of milliseconds — and that thus cannot be examined using slow spatial brain imaging methods. Event-related potentials (ERPs), therefore, provide an especially critical view of language processing, as they have high temporal resolution and can be recorded non-invasively in humans who are simply reading or listening for comprehension. An important part of our laboratory’s research has been to uncover and characterize brain electrical activity associated with language processing.

Our work has helped to characterize a negative-going wave of activity peaking around 400 ms after the onset of a meaningful stimulus. This “N400” ERP component arises from activity in brain areas important for meaning processing. Research in the laboratory has used the N400, along with other ERP and behavioral responses, to build an understanding of the timecourse and mechanisms by which meaning information is derived from individual words (as well as pictures, scenes, and other meaningful stimuli) and combined to create a larger message.
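One common way to quantify a component like the N400 is to take the mean voltage of the averaged ERP in a time window around its peak and compare that value across conditions. The sketch below assumes a 300 to 500 ms window, a 250 Hz sampling rate, a 100 ms pre-stimulus baseline, and made-up toy waveforms; none of these values come from our published studies, and `mean_amplitude` and `toy_erp` are hypothetical helper names.

```python
def mean_amplitude(erp, sampling_rate_hz, baseline_ms, window_start_ms, window_end_ms):
    """Mean voltage of an averaged ERP within a post-stimulus time window.

    `erp` is a list of voltages whose first sample lies `baseline_ms`
    before stimulus onset. Window times are relative to stimulus onset.
    """
    def to_index(time_ms):
        # Convert a time relative to stimulus onset into a sample index.
        return round((time_ms + baseline_ms) * sampling_rate_hz / 1000)

    start, stop = to_index(window_start_ms), to_index(window_end_ms)
    if not 0 <= start < stop <= len(erp):
        raise ValueError("window falls outside the epoch")
    window = erp[start:stop]
    return sum(window) / len(window)


def toy_erp(n400_microvolts):
    """Toy waveform: flat at zero except for a plateau from 300 to 500 ms.

    250 samples at 250 Hz cover 1000 ms; the first 25 samples are the
    100 ms baseline, so 300-500 ms corresponds to samples 100-149.
    """
    return [n400_microvolts if 100 <= i < 150 else 0.0 for i in range(250)]


# Assumed values: a larger (more negative) N400 to unexpected words.
unexpected = mean_amplitude(toy_erp(-4.0), 250, 100, 300, 500)
expected = mean_amplitude(toy_erp(-1.0), 250, 100, 300, 500)

# The "N400 effect" is the difference between the two conditions.
n400_effect = unexpected - expected
print(unexpected, expected, n400_effect)
```

Because the N400 is negative-going, a more negative mean amplitude corresponds to a larger N400, and a less negative value to a smaller (facilitated) one.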

For example, a deep and long-standing assumption is that words (and other meaningful objects) are recognized before their meaning can be “looked up” in memory and that this recognition process requires different amounts of time for different items. Yet our work has shown that brain activity associated with initial meaning processing is temporally invariant. We have therefore hypothesized and demonstrated that, contrary to the established view, meaning-related information that is distributed across brain areas is bound together by temporal synchrony, such that initial meaning access occurs in a fixed time window relative to encountering a word.

Although the N400 (see above) reflects meaning information activated in response to a word, it is of course not the case that activation states in semantic memory (the brain’s repository of meaning information) are shaped solely by the word currently being processed. An important contribution of our research has been to contest the prevalent assumption that language comprehension is a passive process of looking up word meanings. Instead, we provided some of the first evidence that the brain uses context information to predict – that is, to anticipate and prepare for likely upcoming stimuli. Initially controversial, our views have now become mainstream, and understanding the scope and nature of prediction mechanisms has become a centerpiece of modern language research.

Our laboratory continues to lead this line of work, providing evidence that predictions can be quite specific and that they are advantageous for dealing with a rapid, noisy, often ambiguous input stream — but can also engender processing consequences, such as the need for revision when expectations are disconfirmed. Our work was also one of the first to link anticipatory processes in language comprehension to language production mechanisms in the left hemisphere.

The brain is made up of two hemispheres, the right and the left, and they are very similar in their anatomy and their physiology. Yet, a striking number of differences in how the two hemispheres function have been described, ranging from differences in the processing of basic sensory features to differences in emotion, language, and problem solving. This disparity between the general neural similarity of the two hemispheres and the distinctiveness of their functions highlights the limits of our current understanding of the mapping between neural and functional properties. Whereas there is now a better sense of which brain areas may be involved in various language functions, the computations that these brain areas are performing are not yet understood well enough to explain why a left hemisphere (LH) area is crucially involved in a given function while the corresponding right hemisphere (RH) area, with the same basic cell types, neurochemistry, and inputs and outputs, is not. In the CABLab, we conduct studies designed to understand how and why the hemispheres differ in how they comprehend and remember the world, as well as how they work together during complex cognitive tasks like language processing.

To examine hemispheric differences in meaning processing, we use what is known as the visual half-field presentation method, which we combine with the measurement of brain electrical responses (ERPs). The visual half-field technique takes advantage of the fact that information presented in peripheral vision on the right side is sent first to the left hemisphere, and, correspondingly, information in left peripheral vision is sent first to the right hemisphere. Although the hemispheres can share information (across the corpus callosum, a bundle of nerve fibers connecting the two hemispheres), giving one hemisphere information more directly gives it a processing advantage, which reveals differences between the hemispheres.

Using this technique, we have shown that language comprehension is accomplished via multiple mechanisms, distributed across the two hemispheres of the brain. Our studies have shown that some assumptions that have been made about how the hemispheres function do not hold up when we measure brain activity rather than just behavior. For example, although some theories have argued that the right hemisphere represents the meaning of words more “coarsely” than the left, and that the right hemisphere cannot build a representation of the meaning of an entire sentence (as opposed to a single word), our ERP studies have consistently indicated that the right and left hemispheres represent basic aspects of meaning fairly similarly, and that the right hemisphere can construct the meaning of a sentence. Both hemispheres seem to be able to use world knowledge to comprehend sentences; however, they use context information differently.

We have put forward a theoretical framework (PARLO: Production Affects Reception in Left Only) for understanding the pattern of ERP data in the context of previous neuropsychological and behavioral results. In particular, we have found that the left hemisphere (which is better at language production) seems to make predictions about what is likely to happen next (such as what word will come up in a sentence) and to get ready to process those inputs. In contrast, the RH language network seems to be more feed-forward in nature, which also affords more veridical memory for verbal material. In addition, we showed that the RH enriches language comprehension through the engagement of sensory imagery. PARLO integrates a range of findings in the word recognition, sentence processing, and verbal memory literatures, and not only provides a basis for understanding asymmetries but, critically, offers a reconceptualization of normal processing as the emergent product of multiple mechanisms unfolding cooperatively.

Normal aging causes widespread changes in the brain, many of which could be expected to impact language functions. However, in the absence of disease (such as Alzheimer’s) or stroke, which can have serious consequences for language, it is generally found that older adults perform much like younger adults on language comprehension tasks, and, indeed, that vocabulary and other kinds of knowledge can increase throughout the life span. This similar level of performance, however, may come about through different underlying brain processes.

For example, recordings of brain electrical activity have revealed changes in the pattern of responses to words in context. In particular, younger adults show facilitation (smaller N400s) for incongruent words that are related to an expected completion, suggesting that they are using sentence context to predict likely upcoming words. In contrast, older adults as a group show N400 responses that pattern primarily with plausibility (the fit of the word to the sentence), suggesting that they do not make predictions, but instead wait for the word and integrate it with the sentence context information. There is substantial variability among older adults, however, which is correlated with factors such as vocabulary and verbal fluency (the ability to come up with words rapidly).

Our research program, which is funded by the National Institute on Aging, is designed to try to understand how language comprehension changes with age. In particular, we aim to uncover which aspects of language change most with normal aging (e.g., word or sentence processing), how individuals differ (and what predicts those differences), and how language and memory abilities are linked. We also examine the underlying brain mechanisms, with a special interest in how the two hemispheres work together during language processing and how the contributions of each, and the balance of labor between them, change over the lifespan. Ultimately, we hope to understand how language comprehension is achieved by the brain in adults of all ages, and what factors can compensate for or protect against age-related changes in language ability.

Individual differences suggest the possibility of plasticity in patterns of neural connectivity and resulting language comprehension outcomes. We have traced the source of some individual differences in comprehension to variations in hemispheric dominance, which, in turn, are linked to heritable factors related to handedness. These biological differences, however, are plastic – they change with age, for example. Even within a given individual of a given age, we have shown that, contrary to what is sometimes assumed, language processing mechanisms are not static. Our work has shown that the processing dynamics involved in comprehension can be modulated by factors such as stimulus predictability, task demands, and control – yielding different functional outcomes.

For example, we have shown that lower-functioning older adults can adopt strategies that afford good comprehension when given more control over the input. This adaptation is strikingly dynamic: comprehenders process differently depending on the cognitive load induced by a particular sentence and the moment-to-moment availability of different cognitive resources. In tracking how comprehension mechanisms and the dynamics of their interactions differ within and across individuals over the lifespan, we hope to discover factors that will help comprehenders make better use of context, manage ambiguity, and, ultimately, more effectively comprehend and remember meaningful inputs across task circumstances.